Fast Axiomatic Attribution for Neural Networks

¹Department of Computer Science, TU Darmstadt ²hessian.AI

NeurIPS 2021

Open Access | Preprint (arXiv) | Code

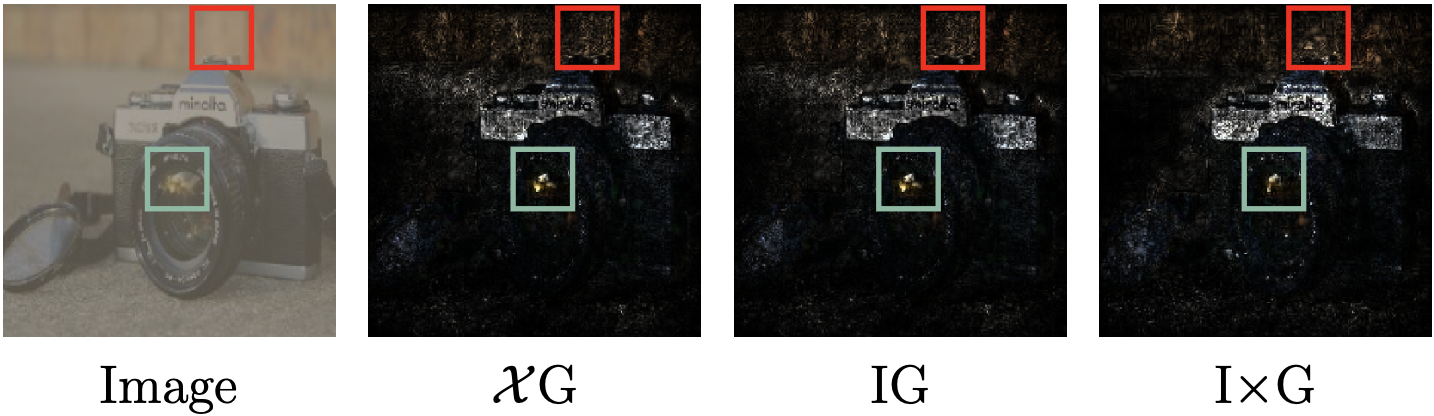

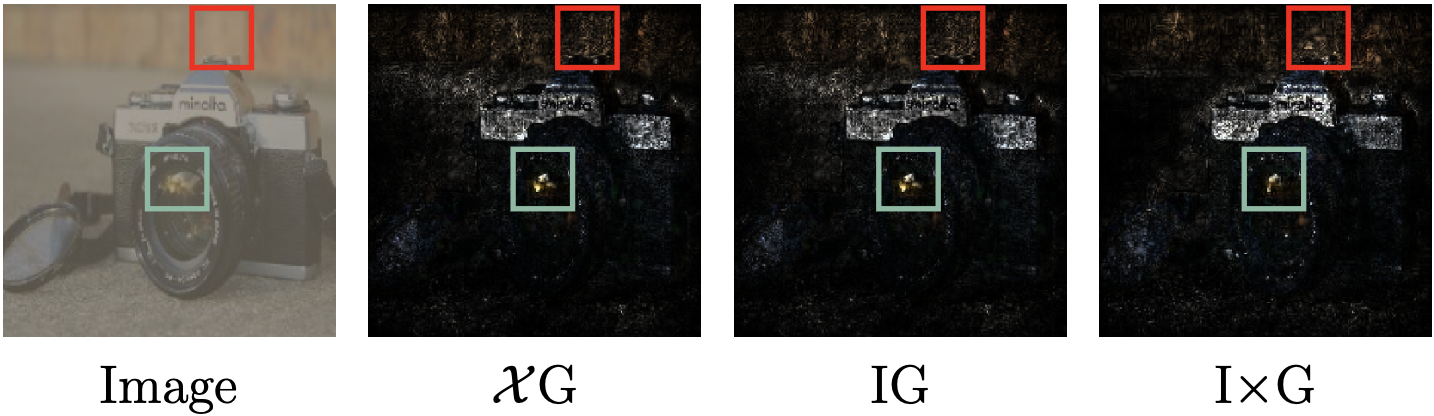

Abstract: Mitigating the dependence on spurious correlations present in the training dataset is a quickly emerging and important topic in deep learning. Recent approaches incorporate priors on the feature attribution of a deep neural network (DNN) into the training process to reduce the dependence on unwanted features. However, until now one needed to trade off high-quality attributions, satisfying desirable axioms, against the time required to compute them. This in turn led either to long training times or to ineffective attribution priors. In this work, we break this trade-off by considering a special class of efficiently axiomatically attributable DNNs, for which an axiomatic feature attribution can be computed with only a single forward/backward pass. We formally prove that nonnegatively homogeneous DNNs, here termed 𝒳-DNNs, are efficiently axiomatically attributable and show that they can be effortlessly constructed from a wide range of regular DNNs by simply removing the bias term of each layer. Various experiments demonstrate the advantages of 𝒳-DNNs, which beat state-of-the-art generic attribution methods on regular DNNs for training with attribution priors.
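The single-pass claim can be illustrated with a small NumPy sketch. It assumes that the axiomatic attribution in question is Integrated Gradients with a zero baseline, and it uses a hypothetical two-layer bias-free ReLU network (`W1`, `w2`, `f`, and `grad_f` are illustrative names, not the authors' code). Because such a network satisfies f(αx) = α·f(x) for α ≥ 0, its gradient is constant along the straight path from 0 to x. Integrated Gradients therefore collapses to input × gradient, which needs only one forward/backward pass.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical bias-free two-layer ReLU network: f(x) = w2 . relu(W1 x).
# With no bias terms, f(a * x) = a * f(x) for every a >= 0 (nonnegative homogeneity).
W1 = rng.normal(size=(16, 8))
w2 = rng.normal(size=16)


def f(x):
    return w2 @ np.maximum(W1 @ x, 0.0)


def grad_f(x):
    # Analytic gradient of f: W1^T (w2 * 1[W1 x > 0]).
    mask = (W1 @ x > 0).astype(float)
    return W1.T @ (w2 * mask)


def input_x_gradient(x):
    # One forward/backward pass: elementwise input times gradient.
    return x * grad_f(x)


def integrated_gradients(x, steps=1000):
    # Midpoint Riemann approximation of Integrated Gradients with a zero baseline:
    # IG_i(x) = x_i * integral_0^1 df(a x)/dx_i da.
    alphas = (np.arange(steps) + 0.5) / steps
    avg_grad = np.mean([grad_f(a * x) for a in alphas], axis=0)
    return x * avg_grad


x = rng.normal(size=8)
ixg = input_x_gradient(x)
ig = integrated_gradients(x)
print(np.allclose(ixg, ig))          # input x gradient matches IG
print(np.isclose(ixg.sum(), f(x)))   # completeness: attributions sum to f(x) - f(0) = f(x)
```

For every α > 0, the ReLU activation pattern of `W1 @ (α * x)` equals that of `W1 @ x`. Every path sample therefore yields the same gradient, and the 1000-step integral costs no more information than a single gradient evaluation. Adding bias terms would let the activation pattern change along the path, and the shortcut would no longer hold in general.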

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. 866008). The project has also been supported in part by the State of Hesse through the cluster projects "The Third Wave of Artificial Intelligence (3AI)" and "The Adaptive Mind (TAM)".